Harvard CS197 AI Research Experiences

Disclaimer: I don’t own any of this content; it all belongs to the rightful owners credited, mentioned, or referenced at the end of the post.

Brief introduction

This is a course run by Harvard and taught by Professor Pranav Rajpurkar.

Dive into cutting-edge development tools like PyTorch, Lightning, and Hugging Face, and streamline your workflow with VSCode, Git, and Conda. You’ll learn how to harness the power of the cloud with AWS and Colab to train massive deep learning models with lightning-fast GPU acceleration. Plus, you’ll master best practices for managing a large number of experiments with Weights and Biases. And that’s just the beginning! This course will also teach you how to systematically read research papers, generate new ideas, and present them in slides or papers. You’ll even learn valuable project management and team communication techniques used by top AI researchers. Don’t miss out on this opportunity to level up your AI skills.

Chapter 1 & 2: Introduction to AI and Setting up environment

These chapters are intuitive, introductory guides to working with AI and to AI research and development. The working environment requires source-code version control (Git), environment management (Conda), and an editor (VSCode).

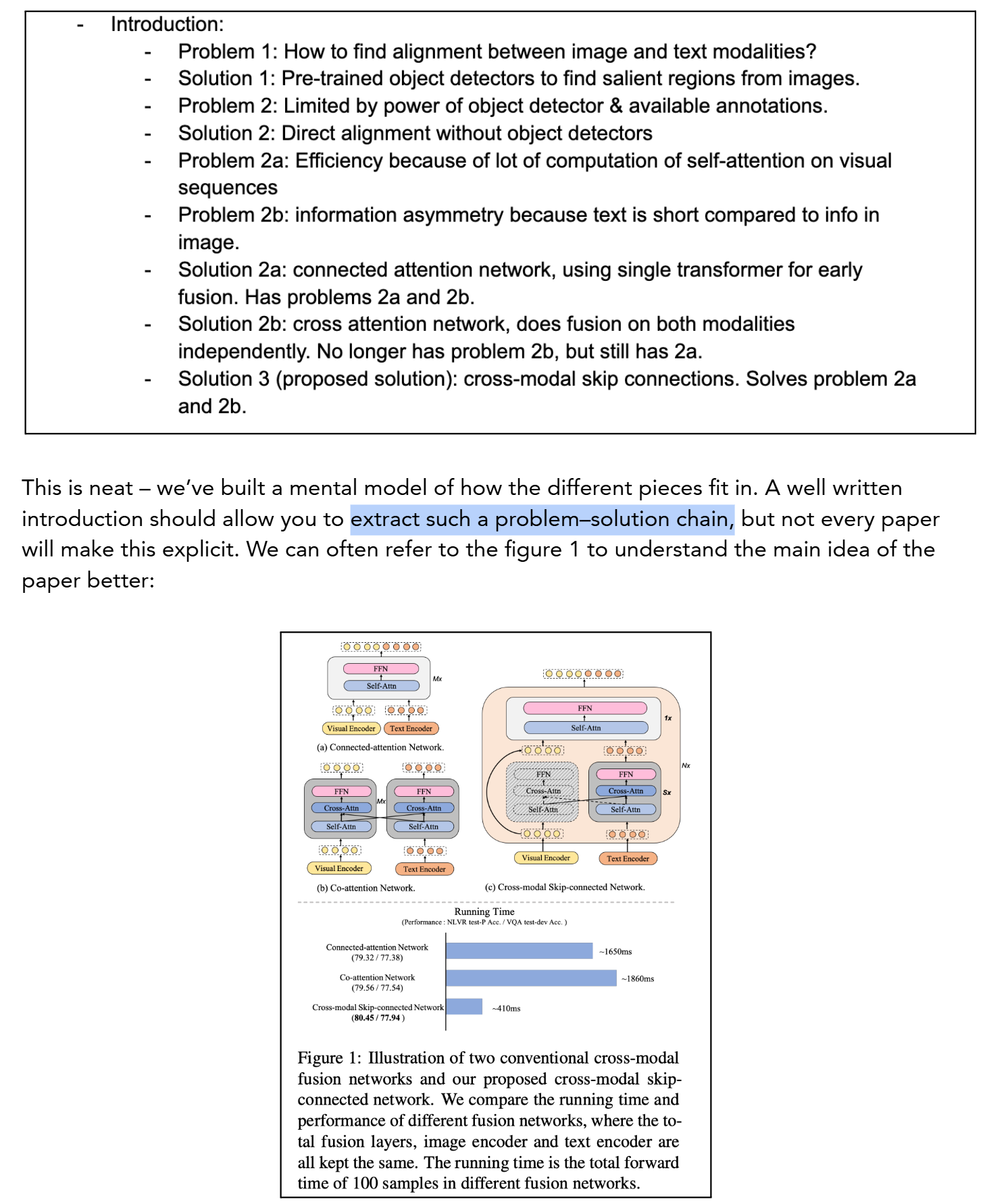

Chapter 3: Reading AI Research Papers

Objectives:

- Conduct a literature search to identify papers relevant to a topic of interest

- Read a machine learning research paper and summarize its contributions

Chapter's content:

TLDR: Read wide for learning, read deep for improving. Take an incremental approach.

I’m going to break down the process of reading AI research papers into two pieces: reading wide, and reading deep. When you start learning about a new topic, you typically get more out of reading wide: this means navigating through the literature, reading small amounts of individual research papers. Our goal when reading wide is to build and improve our mental model of a research topic. Once you have identified key works that you want to understand well in the first step, you will want to read deep: here, you are trying to read individual papers in depth. Both reading wide and deep are necessary and complementary, especially when you’re getting started.

Read wide:

Paperswithcode (AI/ML) | Google scholar | ACM Digital Lib > Taking notes > Check benchmark with recent submissions > Check SOTA > Check dataset (in any order) > Read em and take notes.

Results: Notes condensed with information, may take up to 2-3 hours doing this.

At this point, I find myself particularly intrigued by the SOTA methods: Why are they achieving high performance? According to the review paper, it looks like “training strategies using pre-training” have been an advance. Maybe that’s worth keeping an eye out for!

Read deep:

So I would like you to take an incremental approach here. Understand that, in your first pass, you will not understand more than 10% of the research paper. The paper may require us to read another more fundamental paper (which might require reading a third paper and so on; it could be turtles all the way down)! Then, in your second pass, you might understand 20% of the paper. Understanding 100% of a paper might require a significant leap, maybe because it’s poorly written, insufficiently detailed, or simply too technically/mathematically advanced. We thus want to aim to build up to understanding as much of the paper as possible – I’ll bet that 70-80% of the paper is a good target.

Define your own highlight strategy, for example:

- The yellow highlights are the problems/challenges

- The pink highlights are the solutions to the challenges

- The orange highlights are the main contributions of the work we’re reading.

Some useful tips:

- Read deep: do a three-pass approach.

  First pass: take a quick scan through the title and put some brief thought into it. Read the abstract, introduction, and section headings. Identify the research question, the themes, and the paper’s contributions. Look through the figures for visual details. Glance through the discussion if there is one. Read the conclusion and check whether the research question has been answered.

  Second pass: focus on the introduction and preliminaries. Grasp the underlying content of the methodology section and the results. Take notes on key points. Skip the math if it is too heavy. The goal is to understand the paper’s content without getting bogged down in details.

  Third pass: do a thorough, line-by-line reading with the aim of understanding every detail and challenging the authors’ assumptions and claims. This pass is particularly useful for papers that require a deep understanding or are central to your work. Re-implement the paper’s work and identify areas for improvement.

- Read wide: if you are doing a literature review or search, do only the first pass, then look for referenced sources, mentioned SOTA methods, prominent proposed datasets and benchmarks, …

- By the end, you will have created a list of concepts you need to learn about, and the relevant paper for each, if the paper specifies any.

Chapter 4: Fine-tuning a Language Model using Hugging Face

Objectives:

- Load up and process a natural language processing dataset using the datasets library.

- Tokenize a text sequence, and understand the steps used in tokenization.

- Construct a dataset and training step for causal language modeling.

Chapter's content:

TLDR: HuggingFace’s Dataloader -> Tokenize -> Train/Test split -> Train and Evaluate -> Upload to model hub

HuggingFace

- Community/ Data science center for building, training and deploying ML models based on open src software.

- Guide 1: Causal language modeling and Guide 2: Code on how to fine-tune.

Load up a dataset

HF’s Datasets library’s 3 main feats:

- Load/ process data from CSV/JSON/text or python dict/pandas.DataFrame

- Access/ share datasets (through HF’s hub)

- Can be used with common data and DL libraries (pandas, NumPy, PyTorch, TensorFlow) (interoperable - new vocab hehe)

SQuAD (Stanford Question Answering Dataset) dataset:

- Reading comprehension

- Questions posed by crowdworkers on a set of Wikipedia articles

- The answer to each question is a segment of text (a span) from the corresponding reading passage, or the question may be unanswerable.

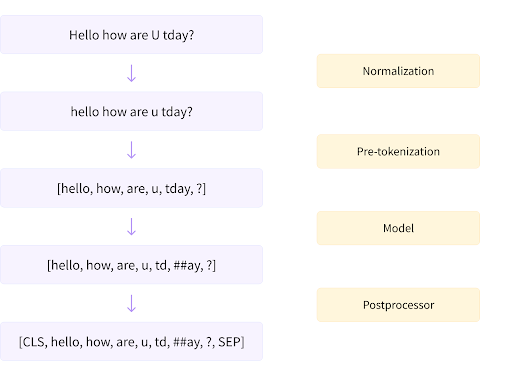
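To make the "answer is a span" idea concrete, here is a minimal sketch in plain Python. It assumes the field layout commonly seen in SQuAD-style records (`context`, `question`, and `answers` holding `text` and `answer_start`). The record itself is made up for illustration and is not taken from the dataset.

```python
# Hypothetical record laid out like a SQuAD-style example: the answer is stored
# as text plus the character offset where it starts in the context.
example = {
    "context": "The course uses Git for version control and Conda for environments.",
    "question": "What does the course use for version control?",
    "answers": {"text": ["Git"], "answer_start": [16]},
}


def extract_answer(record):
    """Return the answer span sliced out of the context, or None if unanswerable."""
    texts = record["answers"]["text"]
    starts = record["answers"]["answer_start"]
    if not texts:  # unanswerable questions are assumed to carry empty answer lists
        return None
    start = starts[0]
    return record["context"][start:start + len(texts[0])]


print(extract_answer(example))
```

Slicing the context with the stored offset recovers the answer text, which is why a model for this task only has to predict where the span starts and ends.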

Tokenize

A tokenization pipeline in Hugging Face comprises several steps:

- (1) Normalization (any cleanup of the text that is deemed necessary, such as removing spaces or accents, Unicode normalization, etc.)

- (2) Pre-tokenization (splitting the input into words)

- (3) Running the input through the model (using the pre-tokenized words to produce a sequence of tokens)

- (4) Post-processing (adding the special tokens of the tokenizer, generating the attention mask and token type IDs).

The above steps show how we can go from text into tokens. There are multiple rules that govern the process that are specific to certain models. For tokenization, there are three main subword tokenization algorithms: BPE (used by GPT-2 and others), WordPiece (used for example by BERT), and Unigram (used by T5 and others); we won’t go into any of these, but if you’re curious, you can learn about them here.
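The four steps can be sketched with a toy tokenizer in plain Python. This is not how the Hugging Face tokenizers are implemented: the vocabulary, the `##` continuation prefix, and every function name below are assumptions chosen for illustration, with a greedy longest-match split standing in for the "model" step in the spirit of WordPiece.

```python
import re
import unicodedata

# Toy vocabulary, invented for illustration; real tokenizers learn theirs from data.
VOCAB = {"[CLS]": 0, "[SEP]": 1, "[UNK]": 2, "read": 3,
         "##ing": 4, "papers": 5, "is": 6, "fun": 7}


def normalize(text):
    """Step 1: lowercase, strip accents, collapse repeated whitespace."""
    text = unicodedata.normalize("NFD", text.lower())
    text = "".join(ch for ch in text if unicodedata.category(ch) != "Mn")
    return " ".join(text.split())


def pre_tokenize(text):
    """Step 2: split into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def subword_split(word):
    """Step 3: greedy longest-match-first split into known subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in VOCAB:
                pieces.append(piece)
                break
            end -= 1
        else:
            return ["[UNK]"]  # no piece matched, so the whole word is unknown
        start = end
    return pieces


def tokenize(text):
    words = pre_tokenize(normalize(text))
    tokens = [piece for word in words for piece in subword_split(word)]
    tokens = ["[CLS]"] + tokens + ["[SEP]"]  # Step 4: add special tokens
    return {
        "tokens": tokens,
        "input_ids": [VOCAB[t] for t in tokens],
        "attention_mask": [1] * len(tokens),
    }


print(tokenize("Reading papers is fun")["tokens"])
```

The word "reading" is not in the toy vocabulary, so it is split into `read` and `##ing`. That is the point of subword tokenization: rare words are built from known pieces instead of collapsing to an unknown token.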

Data Processing

For causal language modeling (CLM), one of the data preparation steps often used is to concatenate the different examples together, and then split them into chunks of equal size. This is so that we can have a common length across all examples without needing to pad.

From

["I went to the yard.<|endoftext|>","You came here a long time ago from the west coast.<|endoftext|>"],

we might change this to:

["I went to the yard.<|endoftext|>You came here", "a long time ago from the west coast.<|endoftext|>"]
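A minimal sketch of this concatenate-then-chunk step, written in plain Python. The function name `group_texts`, the word-level "tokens", and the block size of 4 are assumptions for readability; a real pipeline would apply the same logic to lists of token IDs with a much larger block size.

```python
def group_texts(tokenized_examples, block_size):
    """Concatenate token lists, then cut the stream into equal-sized blocks."""
    stream = [tok for example in tokenized_examples for tok in example]
    # Drop the tail that does not fill a whole block so every chunk has the same length.
    usable = (len(stream) // block_size) * block_size
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]


EOS = "<|endoftext|>"
examples = [
    ["I", "went", "to", "the", "yard", ".", EOS],
    ["You", "came", "here", "a", "long", "time", "ago", ".", EOS],
]
chunks = group_texts(examples, block_size=4)
for chunk in chunks:
    print(chunk)
```

A chunk can straddle two examples, which is why the end-of-text token is kept: it tells the model where one example stops and the next begins. Dropping the incomplete tail is one simple choice; padding it would be another.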

Finetuning and setup Trainer

Code sample:

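    from transformers import AutoModelForCausalLM, Trainer, TrainingArguments

    # Base checkpoint to fine-tune; also used to name the output directory.
    model_checkpoint = "distilgpt2"
    model = AutoModelForCausalLM.from_pretrained(model_checkpoint)

    training_args = TrainingArguments(
        f"{model_checkpoint}-squad",  # output directory
        eval_strategy="epoch",  # evaluate every epoch (older releases: evaluation_strategy)
        learning_rate=2e-5,
        weight_decay=0.01,
        push_to_hub=False,
    )

    # small_train_dataset and small_eval_dataset are the tokenized, chunked
    # splits prepared in the data processing step above.
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=small_train_dataset,
        eval_dataset=small_eval_dataset,
    )

    trainer.train()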

Model Evaluation

Because we want our model to assign high probabilities to sentences that are real, and low probabilities to fake sentences, we seek a model that assigns the highest probability to the test set. The metric we use is ‘perplexity’, which we can think of as the inverse probability of the test set normalized by the number of words in the test set. Therefore, a lower perplexity is better.

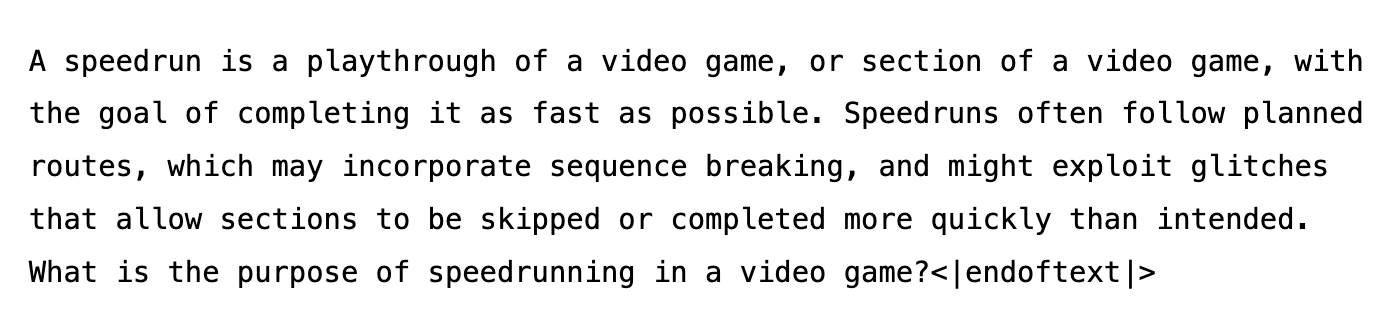
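As a rough sketch of the definition above, perplexity can be computed as the exponential of the average negative log-probability per token, which is the same as the inverse probability of the sequence normalized by its length. The probabilities below are made up for illustration; with a `Trainer`, a common shortcut is to exponentiate the evaluation loss instead.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical per-token probabilities assigned by two models to the same text.
confident = perplexity([0.5, 0.5, 0.5, 0.5])
unsure = perplexity([0.1, 0.1, 0.1, 0.1])
print(confident, unsure)
```

A model that gives every token probability 0.5 behaves as if it were choosing between 2 equally likely options at each step, while 0.1 corresponds to 10 options, so the lower number marks the better model.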

Generation with our fine-tuned model

Code sample:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumed to be the same checkpoint name used during fine-tuning.
    model_checkpoint = "distilgpt2"
    model = AutoModelForCausalLM.from_pretrained(f"rajpurkar/{model_checkpoint}-squad")
    tokenizer = AutoTokenizer.from_pretrained(f"rajpurkar/{model_checkpoint}-squad")

    # Adjacent string literals are joined, which avoids stray whitespace
    # from backslash continuations inside a single literal.
    start_text = (
        "A speedrun is a playthrough of a video game, "
        "or section of a video game, with the goal of "
        "completing it as fast as possible. Speedruns "
        "often follow planned routes, which may incorporate sequence "
        "breaking, and might exploit glitches that allow sections to "
        "be skipped or completed more quickly than intended. "
    )
    prompt = "What is the"

    inputs = tokenizer(
        start_text + prompt,
        add_special_tokens=False,
        return_tensors="pt",
    )["input_ids"]

    outputs = model.generate(
        inputs,
        max_length=100,  # counts the input tokens as well as the new ones
        do_sample=True,
        top_k=50,
        top_p=0.95,
        temperature=0.9,
        num_return_sequences=3,
    )

    # Keep only the newly generated tokens of the first sequence, then prepend the prompt.
    generated = prompt + tokenizer.decode(outputs[0][inputs.shape[-1]:])
    print(generated)

Results:

Chapter 5: Fine-tuning a Vision Transformer using Lightning

Objectives:

- Interact with code to explore data loading and tokenization of images for Vision Transformers.

- Parse code for PyTorch architecture and modules for building a Vision Transformer.

- Get acquainted with an example training workflow with PyTorch Lightning.

Reading code is often an effective way of learning. Today we will step through an image classification workflow with Vision Transformers. We will parse code to process a computer vision dataset, tokenize inputs for Vision Transformers, and build a training workflow using the Lightning (PyTorch Lightning) framework. You might be used to learning a new AI framework through simple tutorials that build in complexity. However, in research settings, you’ll often be faced with codebases that use unfamiliar frameworks. Our lecture today reflects this very setting, and is thus structured as a walkthrough where you will be exposed to code that uses PyTorch Lightning and then proceed to understand parts of it.

Example notebook:

Chapter's content:

TLDR: Data Loading

Lightning

- Lightning is a framework for PyTorch that provides a high-level interface for training models.

- It allows you to write less boilerplate code and focus on the model architecture and training logic
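The division of labour behind "less boilerplate" can be illustrated with a toy in plain Python. The classes below are hypothetical stand-ins and do not use Lightning's API. In Lightning itself, a `LightningModule` subclass and the `Trainer` play these two roles, with the optimizer, devices, and logging handled for you.

```python
class ToyModule:
    """Stand-in for a model class: holds parameters and defines one training step."""

    def __init__(self, lr=0.1):
        self.w = 0.0  # single weight for the model y = w * x
        self.lr = lr

    def training_step(self, batch):
        x, y = batch
        error = self.w * x - y
        self.w -= self.lr * 2 * error * x  # gradient step on the squared error
        return error ** 2


class ToyTrainer:
    """Stand-in for a trainer: owns the epoch and batch loop so the module does not."""

    def __init__(self, max_epochs):
        self.max_epochs = max_epochs

    def fit(self, module, batches):
        history = []
        for _ in range(self.max_epochs):
            epoch_loss = sum(module.training_step(b) for b in batches) / len(batches)
            history.append(epoch_loss)
        return history


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # samples of y = 2x
module = ToyModule(lr=0.05)
losses = ToyTrainer(max_epochs=20).fit(module, data)
print(round(module.w, 4), losses[0], losses[-1])
```

The module only says what happens for one batch. The loop, and in a real framework the device placement, checkpointing, and logging as well, lives in the trainer and is written once.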

Basic skills in Lightning:

- Train a model

- Add validation and test

- Use pretrained models

- Enable script parameters

- Understand and visualize your model

- Predict with your model

Pipeline

Chapter 6 & 7: Solidifying PyTorch Fundamentals

Chapter 8 & 9: Organizing Model Training with Weights & Biases and Hydra

Chapter 10 & 11: A Framework for Generating Research Ideas

Chapter’s featured papers:

- Expert-level detection of pathologies from unannotated chest X-ray images via self-supervised learning

- CLIP (Learning Transferable Visual Models From Natural Language Supervision)

Objectives:

- Identify gaps in a research paper, including in the research question, experimental setup, and findings.

- Generate ideas to build on a research paper, thinking about the elements of the task of interest, evaluation strategy and the proposed method.

- Iterate on your ideas to improve their quality.

Chapter's content:

/** I’ll save this for my AIC task **/

Chapter 12 & 13: Structuring a Research Paper

Chapter 14 & 15: AWS EC2 for Deep Learning: Setup, Optimization, and Hands-on Training with CheXzero

Chapter 16 & 17: Fine-Tuning Your Stable Diffusion Model

Chapter 18: Tips to Manage Your Time and Efforts

Chapter 19: Making Progress and Impact in AI Research

Chapter 20: Tips for Creating High-Quality Slides

Chapter 21: Statistical Testing to Compare Model Performances

Exercises:

Assignments:

Assignment 1: The Language of Code

Assignment 2: First Dive in AI

Assignment 3: Torched

Assignment 4: Spark Joy

Assignment 5: Ideation and Organization

Assignment 6: Stable Diffusion and Research Operations

Course Project:

[Redacted]

References:
